Docker Compose vs. Docker Swarm: using and understanding them

Docker Swarm groups nodes (hosts) into a common cluster. Containers can then run in any number of instances on any number of nodes in the network. Communication between the hosts is based on an overlay network for services: multi-host networking. As mentioned in my article on moving web servers, I tested Docker Swarm as a possible option for a shared cross-host network and briefly summarize what I learned here.

Swarm network

Docker Swarm provides a common backend network for all nodes, so it does not matter on which node a container runs. As an example, a web frontend could run on one host and its database on another. The web frontend reaches the database over the overlay network as if it were on the same host. In addition, published ports are reachable through the IP address of every node, even through a host on which the container is not running (the ingress routing mesh).
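As a rough sketch of this setup, a stack file could look like the following. The image names, the network name backend, the password and port 8080 are assumptions chosen for illustration, not part of my tested configuration.

```yaml
version: '3'

services:
  web:
    image: nginx:latest              # assumed example image
    ports:
      - "8080:80"                    # published port, reachable via every node's IP
    networks:
      - backend

  db:
    image: mariadb:latest            # assumed example image
    environment:
      MARIADB_ROOT_PASSWORD: example # placeholder, replace before real use
    networks:
      - backend

networks:
  backend:
    driver: overlay                  # spans all nodes of the swarm
```

With a file like this, the web containers can reach the database under the service name db, regardless of the node on which either one runs.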

Docker Volumes and Swarm

For permanent storage of container data, Docker volumes or bind mounts are normally used. Docker Swarm takes care of the network, but initially not of the data store: each host stores its own data.

Take the database again as an example, using Docker volumes without a volume driver or centralized storage. If a container is deployed to another host after it has already written data to a volume, that data is not available there; the container behaves as if it had been started for the first time. This does not matter for services without persistent data, but it does for any service that relies on volumes. To make volumes available on all Swarm nodes, suitable volume drivers, shared storage or a NAS can be used. Permanently synchronizing the folders with additional software, for example GlusterFS, would also be conceivable.
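One way to get shared storage without an extra plugin is an NFS-backed volume using the built-in local driver. The following is a minimal sketch, assuming an NFS server at 192.168.1.10 that exports /export/dbdata; both values, the volume name dbdata and the image are hypothetical.

```yaml
version: '3'

services:
  db:
    image: mariadb:latest          # assumed example image
    volumes:
      - dbdata:/var/lib/mysql      # named volume instead of node-local data

volumes:
  dbdata:
    driver: local
    driver_opts:
      type: nfs
      o: "addr=192.168.1.10,rw"    # hypothetical NFS server address
      device: ":/export/dbdata"    # hypothetical export path
```

Each node mounts the same export when a container using the volume is started there, so the data follows the service. Whether a database performs acceptably on NFS is a separate question and should be tested first.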

What is the difference between Docker Swarm and Docker Compose?

The way applications are deployed with Docker Swarm and Docker Compose is very similar. The difference is mainly in the backend: while docker-compose deploys containers on a single Docker host, Docker Swarm distributes them across multiple nodes. In both cases, the application is defined in a YAML file. This file contains the image name, the configuration for each service, and, for Docker Swarm, also the number of containers (replicas) to deploy.
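To illustrate the Swarm-specific part, here is a small sketch of such a file. The service name, image and replica count are assumptions for the example; the deploy section is the part that docker stack deploy evaluates.

```yaml
version: '3'

services:
  web:
    image: nginx:latest   # assumed example image
    deploy:
      replicas: 3         # Swarm keeps three containers of this service running
```

Swarm decides on which nodes the three containers are placed.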

Test setup with Ubuntu server

In preparation, I installed Ubuntu Server, see: Install Ubuntu Server

A Docker setup is assumed on the server, see: Docker.

Initialize Docker Swarm

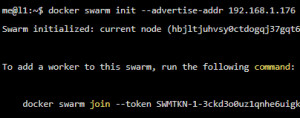

To turn a normal Docker installation into a Swarm environment, a simple command is enough: "docker swarm init".

me@l1:~$ docker swarm init --advertise-addr 192.168.1.176

Swarm initialized: current node (hbjltjuhvsy0ctdogqj37gqt6) is now a manager.

To add a worker to this swarm, run the following command:

docker swarm join --token SWMTKN-1-3ckd3o0uz1qnhe6uigk5wjosx5ugttqm8in9p2v691mje8063z-5p85da80dx8ngyop26t4cjkam 192.168.1.176:2377

To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

During initialization, Docker prints the command for adding further worker nodes to the swarm. Once Docker has been installed on them, additional hosts join the swarm with docker swarm join:

me@l2:~$ docker swarm join --token SWMTKN-1-433lfak5cy35idwysc7gslpne8vsuyjzvwdc2e48eo63qyb5mm-1osbaa8c497s6ms8x2ggn70xu 192.168.1.176:2377

[sudo] password for me:

This node joined a swarm as a worker.

Those who have already initialized a Docker swarm and want to start over can force the creation of a new cluster from the node's current state with the --force-new-cluster option:

docker swarm init --force-new-cluster

Docker-compose command vs. Swarm stack deploy

Docker-compose is typically used to launch the different containers of an application, its services. As an example, a web service and its database can be described in a docker-compose.yml. The file can also specify how the containers of an application reach each other and how they can be reached from outside. All described containers can be downloaded, started or stopped as a unit with one command. If "docker compose up" is called from the folder containing the docker-compose.yml file, the images for all listed services are downloaded and the services are started. On a Docker Engine running in swarm mode, the command prints the following warning:

WARNING: The Docker Engine you're using is running in swarm mode.

Compose does not use swarm mode to deploy services to multiple nodes in a swarm. All containers will be scheduled on the current node.

To deploy your application across the swarm, use `docker stack deploy`.

When deploying with Swarm, "docker stack deploy" is used instead of the "docker-compose" command. Beyond what docker-compose supports, the file for a stack deploy can also include Swarm-specific options such as the number of replicas per service. In turn, Docker Compose without Swarm has certain options that are unnecessary or not supported in Docker Swarm and its stack deploy.

Switching from Docker Compose to Docker Swarm

Those who have been using Docker Compose without Swarm and are switching to Docker Swarm may need to make some adjustments to their docker-compose.yml files. Here are the options I came across when making the switch:

Remove restart and expose

Anyone using a docker-compose file in Swarm mode with docker stack deploy will be alerted to options that cannot or should not be used with Swarm:

Ignoring unsupported options: restart

Ignoring deprecated options:

expose: Exposing ports is unnecessary - services on the same network can access each other's containers on any port.
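Of the two options, only restart describes behaviour worth carrying over. Swarm restarts failed tasks on its own, and the deploy section offers restart_policy as a counterpart if that behaviour needs tuning. The following is a sketch under assumptions: the service name, image and delay value are placeholders.

```yaml
version: '3'

services:
  web:
    image: imagename:latest   # placeholder image name
    deploy:
      restart_policy:
        condition: any        # restart regardless of exit status
        delay: 5s             # assumed wait time between restart attempts
```

Other accepted values for condition are none and on-failure.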

Accordingly, the "restart" and "expose" options should be removed from the docker-compose.yml file when using Swarm:

#restart: always

#expose:

Container_name option is not supported

Ignoring deprecated options:

container_name: Setting the container name is not supported.

With Docker Compose without Swarm, each container can be given a name with the "container_name" option. Docker Swarm composes the name from the service name and a stack name that is passed to docker stack deploy. The option should therefore be removed from the docker-compose.yml file for use with Swarm:

services:
  web:
    #container_name: libenet_web

The name is passed along when the stack is started:

docker stack deploy -c docker-compose.yaml stackname

Docker Swarm builds the name from the stack name and the service, "web:" in the example: the prefix "stackname_" is added to the service.

The final service name for this example would be "stackname_web"; the names of its containers start with it, followed by the replica number and a task ID.

Using Docker Stack with Dockerfile

For my web service, I previously used the "build" option in Docker Compose, which created its own images based on a Dockerfile when started using "docker compose up":

version: '3'
services:
  web:
    container_name: laravel_web
    build:
      context: .
      dockerfile: Dockerfile

See: my Docker web server setup for Laravel - config in detail.

The "build:" option is not supported by docker stack deploy. Images must be created before using Docker Swarm and must be available locally or in a remote registry. With several nodes, every node that is supposed to run the service must be able to obtain the image. The image is created with docker build, run in the folder where the Dockerfile is located:

docker build -t imagename .

After running the build command, the image (named imagename) is available in the local image store, allowing it to be used in the Docker compose file as follows:

version: '3'
services:
  example-service:
    image: imagename:latest
    ...

Docker Swarm encrypted ingress network on web servers on the Internet?

Swarm management and control plane data is always encrypted, but application data between Swarm nodes is not.

Note: Application data between Swarm nodes is not encrypted by default. To encrypt this traffic on a particular overlay network, use the --opt encrypted flag on docker network create. This enables IPsec encryption at the VXLAN level. This encryption results in a non-negligible performance loss, so you should test this option before deploying it in production. See: https://docs.docker.com/engine/swarm/networking/ (Date: 09/28/2022)
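The same option can also be set for a network that is defined in the stack file itself. The following is a sketch, not something I verified in the test setup: the service, image and network name are assumptions, and to my understanding the overlay driver only checks that the encrypted option is present, so the value "true" is mainly there for readability.

```yaml
version: '3'

services:
  web:
    image: nginx:latest      # assumed example image
    networks:
      - backend

networks:
  backend:
    driver: overlay
    driver_opts:
      encrypted: "true"      # assumption: presence of the key enables encryption
```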

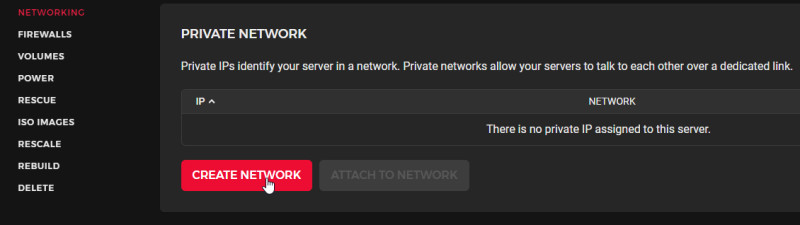

Anyone using Docker Swarm for servers that are located on the Internet should use a private network for communication between the servers. Certain cloud providers offer a way to create a private network between servers for this purpose.

By default, Swarm accepts connections for the Swarm backend on all addresses. To limit this traffic to the internal network, the --listen-addr option can be passed. As an example, here is the command for initializing a Docker Swarm with one public and one private address:

docker swarm init --advertise-addr PublicIP --listen-addr PrivateIP:2377

If the network between the hosts is untrusted, the traffic for the application data can also be encrypted. Encryption for a specific network can be enabled with the following command:

docker network create --opt encrypted --driver overlay --attachable my-attachable-multi-host-network

Conclusion

Docker Swarm provides an easy way to distribute a Docker setup across multiple hosts. However, central storage for the volumes is needed for sensible operation. See also: Moving web servers with Docker containers, theory and practice.

Possibly also interesting: Docker Swarm, Portainer and Traefik combined.
