ZFS vs BTRFS - file system | deduplication and snapshots

While looking for file system features such as snapshots and deduplication, I ended up first with ZFS and later with BTRFS. On Linux, ext4 is currently the de facto standard, but file systems like ZFS and BTRFS offer considerable added value.

For example, with ZFS or BTRFS several disks can form a pool. RAID protects the pool against the failure of a disk, and the storage space can be expanded at any time by adding disks.

BTRFS is part of the mainline Linux kernel, whereas ZFS has to be added as a separately maintained kernel module.

Linux users have several options when choosing a file system. For Windows users the question does not arise: the answer is NTFS. NTFS has been extended over the years with features for which it was not originally designed. For example, NTFS can now compress and encrypt data and create snapshots via "Volume Shadow Copy". In Windows Server, features such as Storage Spaces, shared access to a volume by several servers (Cluster Shared Volume: Hyper-V) and deduplication have been added. Deduplication on NTFS is not inline; it runs afterwards as a scheduled task.

Copy on Write (COW)

Both ZFS and BTRFS are, in contrast to NTFS, copy-on-write file systems. With a copy-on-write file system, changed blocks are not overwritten in place; the new data is written to free space and the metadata is then updated to point to it. This makes snapshots easier to implement. A snapshot represents the state of the file system at a particular point in time. Against logical errors such as accidental deletion or overwriting, snapshots can take over the role of a backup. Of course, to protect against hardware failure or a defective file system, a backup should still be created, even though the RAID functionality built into ZFS and BTRFS protects against the failure of one disk when used with two or more disks.
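To make the copy-on-write idea more concrete, here is a minimal toy model in Python. It is only a sketch of the principle, not how ZFS or BTRFS are actually implemented; the class `CowVolume` and its methods are invented for illustration.

```python
class CowVolume:
    """Toy copy-on-write volume: a block map pointing into an append-only store."""

    def __init__(self):
        self.store = []       # physical blocks; never overwritten in place
        self.block_map = {}   # logical block number -> index into self.store
        self.snapshots = {}   # snapshot name -> frozen copy of the block map

    def write(self, logical_block, data):
        # Write the new data to free space first, then update the metadata.
        self.store.append(data)
        self.block_map[logical_block] = len(self.store) - 1

    def read(self, logical_block, snapshot=None):
        mapping = self.snapshots[snapshot] if snapshot else self.block_map
        return self.store[mapping[logical_block]]

    def snapshot(self, name):
        # A snapshot copies only the metadata, not the data blocks.
        self.snapshots[name] = dict(self.block_map)


vol = CowVolume()
vol.write(0, b"version 1")
vol.snapshot("monday")
vol.write(0, b"version 2")

print(vol.read(0))                     # current state of block 0
print(vol.read(0, snapshot="monday"))  # block 0 as it was at snapshot time
```

Because the old block is never overwritten, the snapshot stays valid without copying any data; it only costs space once blocks start to differ from the live file system.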

(Inline) deduplication

Deduplication means that the file system does not write blocks again that are already stored on the disk, but references the existing blocks instead.

If one and the same folder is copied several times, all copies together only require as much space as a single copy, since the block patterns were already stored with the first folder.

Most file systems that support deduplication perform it after the fact, via a scheduled task. A few file systems deduplicate during the write operation itself, i.e. inline. Inline deduplication usually requires a relatively large amount of system resources, i.e. RAM and CPU performance.
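The following Python sketch illustrates block-level inline deduplication with a hash table. It is a simplified model under stated assumptions: the 4 KiB block size, the use of SHA-256 and the `DedupStore` class are choices made for this example, not a description of any real file system.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size for this sketch


class DedupStore:
    def __init__(self):
        self.blocks = {}  # SHA-256 digest -> block data, stored only once
        self.files = {}   # file name -> list of block digests

    def write_file(self, name, data):
        digests = []
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            digest = hashlib.sha256(block).hexdigest()
            # Inline deduplication: store the block only if it is not known yet.
            self.blocks.setdefault(digest, block)
            digests.append(digest)
        self.files[name] = digests

    def read_file(self, name):
        return b"".join(self.blocks[d] for d in self.files[name])

    def physical_bytes(self):
        return sum(len(block) for block in self.blocks.values())


store = DedupStore()
# Four distinct blocks of 4 KiB each (16 KiB in total).
payload = b"".join(bytes([i]) * BLOCK_SIZE for i in range(4))
store.write_file("folder/a", payload)
store.write_file("copy-of-folder/a", payload)

print(store.physical_bytes())  # space used for both copies together
```

The hash table is also the reason for the resource usage mentioned above: every write needs a hash computation and a lookup, and the table has to stay quickly accessible, ideally in RAM.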

ZFS

ZFS is a copy-on-write file system that was originally developed for Sun Solaris. ZFS was later ported to FreeBSD and made available for Linux through the kernel module "ZFS on Linux". ZFS offers integrated RAID functionality, includes its own volume manager and supports files of up to 16 EiB. The pool can be extended with additional disks at any time. Besides features like transparent compression, checksums and snapshots, ZFS also offers inline deduplication.

Because of these possibilities I tested "ZFS on Linux" with Ubuntu.

With inline deduplication enabled, I ran into the problem that ZFS used 100% CPU during larger copy operations: performance dropped and the computer eventually stopped responding. To be fair, most other file systems do not offer inline deduplication at all.

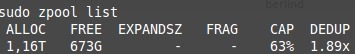

According to some reports on the Internet, a ZFS volume should only be filled up to 80%, because performance degrades massively beyond that. I also had problems with standby: when waking up, the computer occasionally could not load the ZFS volume, and it would sometimes hang on shutdown as well. Because of these shortcomings I searched for alternative file systems with a similar feature set and found BTRFS.

BTRFS

BTRFS is now an integral part of the Linux kernel and, like ZFS, a copy-on-write file system. Apart from inline deduplication, BTRFS offers almost all the features of ZFS: integrated RAID functionality, a built-in volume manager, support for file systems of up to 16 EiB, transparent compression, checksums and snapshots. Deduplication currently only works after the fact, via commands.

BTRFS is extremely flexible to extend. For example, disks can be added to or removed from the pool at any time. It is also possible to combine disks of different sizes in a RAID, since BTRFS builds the RAID at the chunk level rather than at the disk level. For RAID1 this means that every 1 GiB chunk is always stored on two different disks at the same time, which protects the data against the failure of one disk. If disks of different sizes are used, BTRFS tries to keep their fill levels the same: large disks are written to more often, small ones less often. The usable capacity is halved when using RAID1. (see e.g. jrs-s.net/2014/02/13/btrfs-raid-awesomeness/)
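The chunk-level mirroring can be modelled in a few lines of Python. This is a simplified sketch that assumes each 1 GiB chunk is placed on the two disks with the most free space; the real BTRFS allocator has more details, and the function name `raid1_usable_gib` is made up for this example.

```python
def raid1_usable_gib(disk_sizes_gib):
    """Simulate RAID1 chunk allocation and return the usable capacity in GiB."""
    free = list(disk_sizes_gib)
    usable = 0
    while True:
        # Pick the two disks with the most free space for the next chunk pair.
        order = sorted(range(len(free)), key=lambda i: free[i], reverse=True)
        if len(order) < 2 or free[order[1]] < 1:
            break  # no second disk left to hold the mirror copy
        free[order[0]] -= 1
        free[order[1]] -= 1
        usable += 1  # one 1 GiB chunk of user data, stored twice
    return usable


for disks in ([2, 2], [4, 2, 2], [3, 2, 1], [3, 1]):
    print(disks, "->", raid1_usable_gib(disks), "GiB usable")
```

In the last case (3 GiB and 1 GiB) only 1 GiB is usable, because the second copy of every chunk needs a different disk. With mixed sizes, half of the raw capacity is therefore only reached when the largest disk is no bigger than all the others combined.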

BTRFS and Raid5/6

With BTRFS, the RAID level can be changed online. RAID 5 and RAID 6, however, are currently still not recommended for production use:

Current status: Reliability, Scrub + RAID56. Stability: mostly OK

Current status: Block group profile. Stability: unstable

see: btrfs.readthedocs.io/en/latest/btrfs-man5.html#raid56-status-and-recommended-practices

Backup and file versions

My motivation for looking at alternative file systems comes from my backup requirements. In my view, a backup should cover the following points:

- Protection against deletion or overwriting of files.

- Protection against the failure of a hard disk

- Protection against the complete failure of the PC

One of the backup tools I use is the command-line tool rsync. When the PC starts, rsync copies all changes to the original data to my external hard disk.

On closer inspection, this backup strategy has a crucial catch: If I change or delete data, it will also be changed or deleted in the backup. Strictly speaking, this setup only protects against the failure of the source hard disk, but not against logical errors.

If I copy multiple versions to different folders with rsync, the disk will eventually be full. So I would have to pay for different version levels with additional hard disks.

The problem is similar when using a backup program with full and incremental backups: here, too, I sooner or later run out of space, because after each additional full backup the data is stored on my backup disk once more.

With a modern file system the space problem can be solved very easily, either by deduplication of the data or even better by snapshots.

Let's take a look at the first option:

Deduplication

With deduplication, blocks of data are stored only once and then used multiple times. If the same file or a file with a similar bit pattern is stored on the disk, existing data patterns are also used for the new file. Files that are already on the disk do not require any additional storage space when they are stored on the disk again.

For my backup, this would mean copying my data to the backup drive over and over again. For each copy operation I could use a separate folder named after the date. Deduplication ensures that only changed data needs additional storage space. This approach is not bad, but every copy operation costs time, CPU, memory and hard disk performance. That's why I ended up with snapshots:
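A rough back-of-the-envelope calculation shows how much space the dated folders would need with and without deduplication. The numbers (500 GiB of data, 30 copies, 2% of the data changed between copies) are purely illustrative assumptions, and the helper `space_needed_gib` is invented for this sketch.

```python
def space_needed_gib(data_gib, copies, changed_fraction):
    """Return (without_dedup, with_dedup) space in GiB for dated full copies."""
    without_dedup = data_gib * copies
    # The first copy is stored in full; each later copy only adds changed blocks.
    with_dedup = data_gib + (copies - 1) * data_gib * changed_fraction
    return without_dedup, with_dedup


plain, deduped = space_needed_gib(data_gib=500, copies=30, changed_fraction=0.02)
print(f"without deduplication: {plain:.0f} GiB")
print(f"with deduplication:    {deduped:.0f} GiB")
```

Under these assumptions the 30 copies need about 15,000 GiB without deduplication and roughly 790 GiB with it. The saving in space does not reduce the copy effort, though: all data still has to be read and compared on every run.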

Snapshots and Backups

Snapshots offer the possibility to keep different versions of the filesystem. This solves the problem of my original rsync backup. I can implement the following backup strategy when using snapshots:

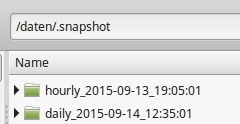

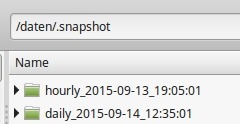

Rsync copies all my data to a backup disk. After the initial copy, only changes need to be transferred (incremental). The backup disk reflects the state at the time of the last backup. If a snapshot is created after each copy run, I can also access older backups. My data is thus protected not only against hardware failure, but also against accidental deletion or overwriting.
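The rsync-then-snapshot sequence can be sketched as a small Python helper that builds the two command lines. All paths are hypothetical examples; the sketch assumes that the backup target is a BTRFS subvolume and that the snapshot directory lives on the same BTRFS file system. The functions `backup_commands` and `run_backup` are invented for this illustration.

```python
import subprocess
from datetime import datetime


def backup_commands(source, backup_subvol, snapshot_dir, now=None):
    """Return the rsync and btrfs command lines for one backup run."""
    stamp = (now or datetime.now()).strftime("%Y-%m-%d_%H%M%S")
    # -a keeps permissions and timestamps, --delete mirrors deletions to the backup.
    rsync_cmd = ["rsync", "-a", "--delete",
                 f"{source.rstrip('/')}/", f"{backup_subvol.rstrip('/')}/"]
    # -r creates a read-only snapshot of the freshly updated backup subvolume.
    snapshot_cmd = ["btrfs", "subvolume", "snapshot", "-r",
                    backup_subvol, f"{snapshot_dir.rstrip('/')}/{stamp}"]
    return [rsync_cmd, snapshot_cmd]


def run_backup(source, backup_subvol, snapshot_dir):
    for cmd in backup_commands(source, backup_subvol, snapshot_dir):
        subprocess.run(cmd, check=True)  # abort if a step fails


# Hypothetical paths, only printed here and not executed.
cmds = backup_commands("/home/user", "/mnt/backup/data", "/mnt/backup/snapshots",
                       now=datetime(2024, 1, 2, 3, 4, 5))
for cmd in cmds:
    print(" ".join(cmd))
```

Since rsync runs with `--delete`, the live backup always mirrors the source, while the read-only snapshots preserve the older states.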

A possible backup strategy

Assuming the computer is used as the primary storage target, snapshots can be created automatically and regularly when using BTRFS. The data can also be copied to a NAS automatically (e.g. with rsync).

When using Raid1, the primary file system is protected against the failure of a hard disk.

The snapshots protect against deletion or overwriting of files; the copy to another device protects against the failure of the file system or of the complete primary hardware.

Questions / Comments

I've not used BTRFS, but I do use ZFS on both my desktop and server machines. I haven't experienced the hangups you do coming out of standby. But what I did want to point out is that ZFS offers incremental backups using the zfs send and zfs receive commands. I use these to snapshot the zvol (zfs volume) of a running virtual machine and incrementally back this up to my backup server, as well as incrementally backing up zfs datasets.